Exowheel

pneumatically augmented tactile steering wheel

Exowheel & Exoskin are the subject of my master's thesis at the Tangible Media Group at the MIT Media Lab, which you can read in full here. Exowheel is a pneumatically augmented automotive steering wheel for tactile feedback.

Traditional research into the area has relied on haptic feedback to create a tactile interface. Shape & texture change can create stimuli that are more perceivable, understandable, and intuitive, and I sought to harness this using recent advancements in programmable material prototyping.

By incorporating Exoskin (shown next), Exowheel can transform its surface dynamically to create a customized grip for each individual user on the fly, and to adapt that grip during the drive as the car moves from congested city driving to rougher rural roads.

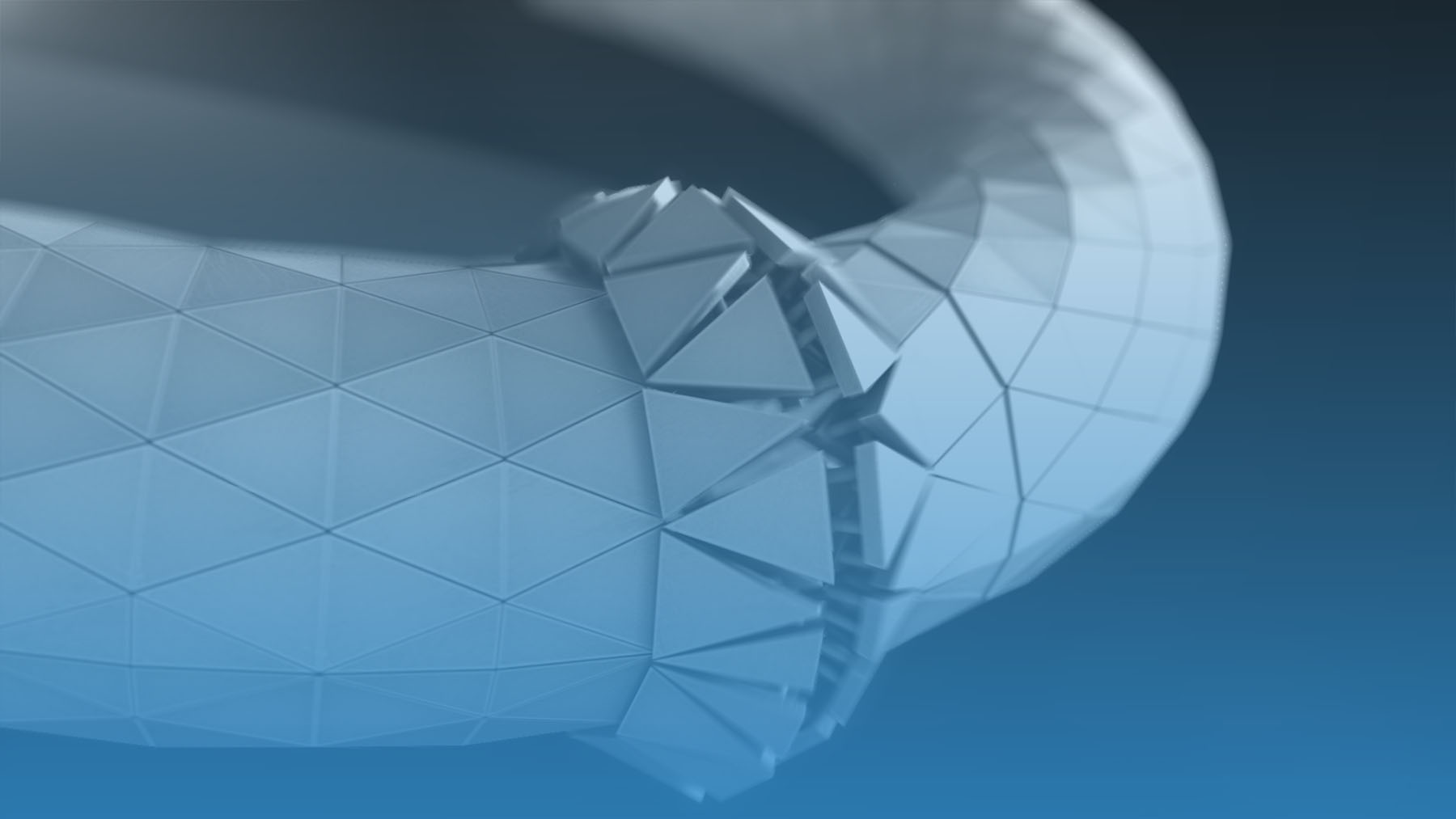

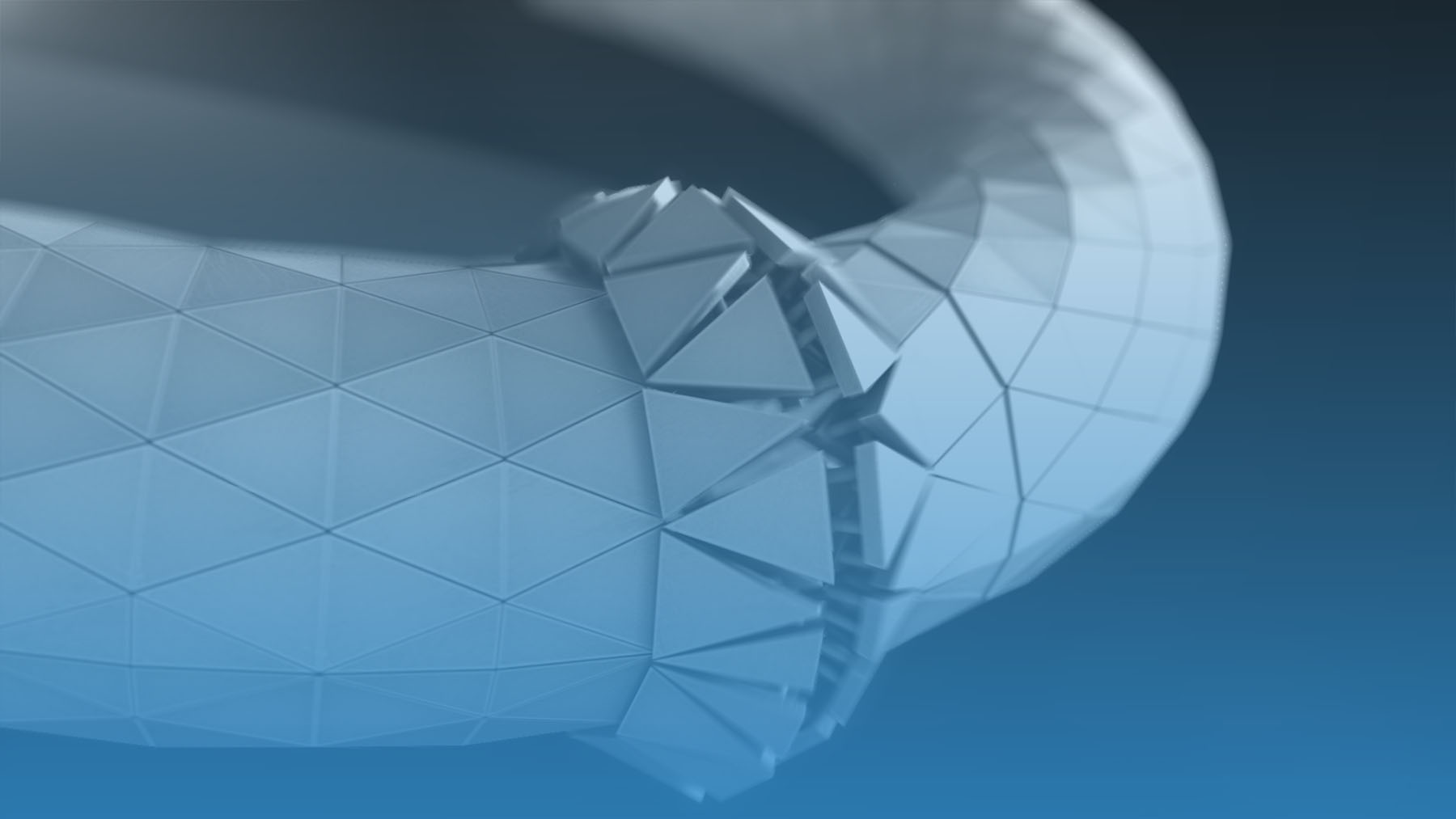

For the final panelization design and modeling, I used Grasshopper for Rhino, so that changes made to the design were instantly propagated through all parts of the model, avoiding the need for repetitive redrawing with each iteration.

This specific script used an accurately measured torus as the initial input. It then (1) subdivides the torus surface into triangular panels, (2) extrudes them, (3) finds the 3 equidistant points of each triangular surface and extrudes a cylinder between the faces, and (4) finally connects everything together.
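The first two steps of that pipeline can be approximated outside Grasshopper. What follows is a minimal Python sketch, not the thesis script: it samples a torus on a regular parameter grid, splits each grid cell into two triangular panels, and offsets a panel along its normal as a stand-in for the extrusion. The function names, the regular-grid subdivision, and any radii or panel counts passed in are illustrative assumptions.

```python
import math

def torus_point(R, r, u, v):
    """Point on a torus with major radius R and minor (tube) radius r."""
    ring = R + r * math.cos(v)
    return (ring * math.cos(u), ring * math.sin(u), r * math.sin(v))

def triangulate_torus(R, r, nu, nv):
    """Split an nu-by-nv parameter grid into 2 * nu * nv triangular panels."""
    panels = []
    for i in range(nu):
        for j in range(nv):
            u0, u1 = 2 * math.pi * i / nu, 2 * math.pi * (i + 1) / nu
            v0, v1 = 2 * math.pi * j / nv, 2 * math.pi * (j + 1) / nv
            a = torus_point(R, r, u0, v0)
            b = torus_point(R, r, u1, v0)
            c = torus_point(R, r, u1, v1)
            d = torus_point(R, r, u0, v1)
            # Each quad cell becomes two triangles sharing the a-c diagonal.
            panels.append((a, b, c))
            panels.append((a, c, d))
    return panels

def panel_normal(tri):
    """Unit normal of a triangle, from the cross product of two edges."""
    (ax, ay, az), (bx, by, bz), (cx, cy, cz) = tri
    e1 = (bx - ax, by - ay, bz - az)
    e2 = (cx - ax, cy - ay, cz - az)
    n = (
        e1[1] * e2[2] - e1[2] * e2[1],
        e1[2] * e2[0] - e1[0] * e2[2],
        e1[0] * e2[1] - e1[1] * e2[0],
    )
    length = math.sqrt(sum(k * k for k in n))
    return tuple(k / length for k in n)

def extrude(tri, thickness):
    """Offset copy of a panel, moved `thickness` along its own normal."""
    n = panel_normal(tri)
    return tuple(tuple(p[k] + thickness * n[k] for k in range(3)) for p in tri)
```

Calling `triangulate_torus(190.0, 15.0, 24, 8)` (hypothetical dimensions in millimetres) yields 384 panels, and `extrude(panel, 2.0)` gives the top face of one 2 mm thick scale. The connecting cylinders and final joining from steps (3) and (4) are left out of this sketch.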

However, I envisioned Exoskin as a greater, more versatile platform for texture change, rather than one purely focused on the automotive market. Because of its conformability, offloadable locus of actuation, and integrability, Exoskin can be used in situations spanning wearables, products, and even furniture. By integrating different sets of inelastic materials and adjusting the overall scale of the composite, we can specifically tune Exoskin for each intended context.

Exoskin provides a way to embed a multitude of static, rigid materials into actuatable, elastic membranes, allowing the new semi-rigid composites to sense, react, and compute. It is composed of three key layers: (1) rigid layer: the external “skin” controlling the perceived, tactile, and material qualities of the final composite; (2) elastic layer: the underlying driver that actuates the system; (3) sensing layer: a capacitive feedback loop that senses interaction & deformation.

Exoskin

Prototype footage and concept application renders

By better integrating these programmable membranes into more rigid materials, we significantly expand the design space and palette, enabling industrial designers, architects, and others to consider three material values when integrating shape & texture change into their products: functionality, aesthetic quality, and emotional quality.

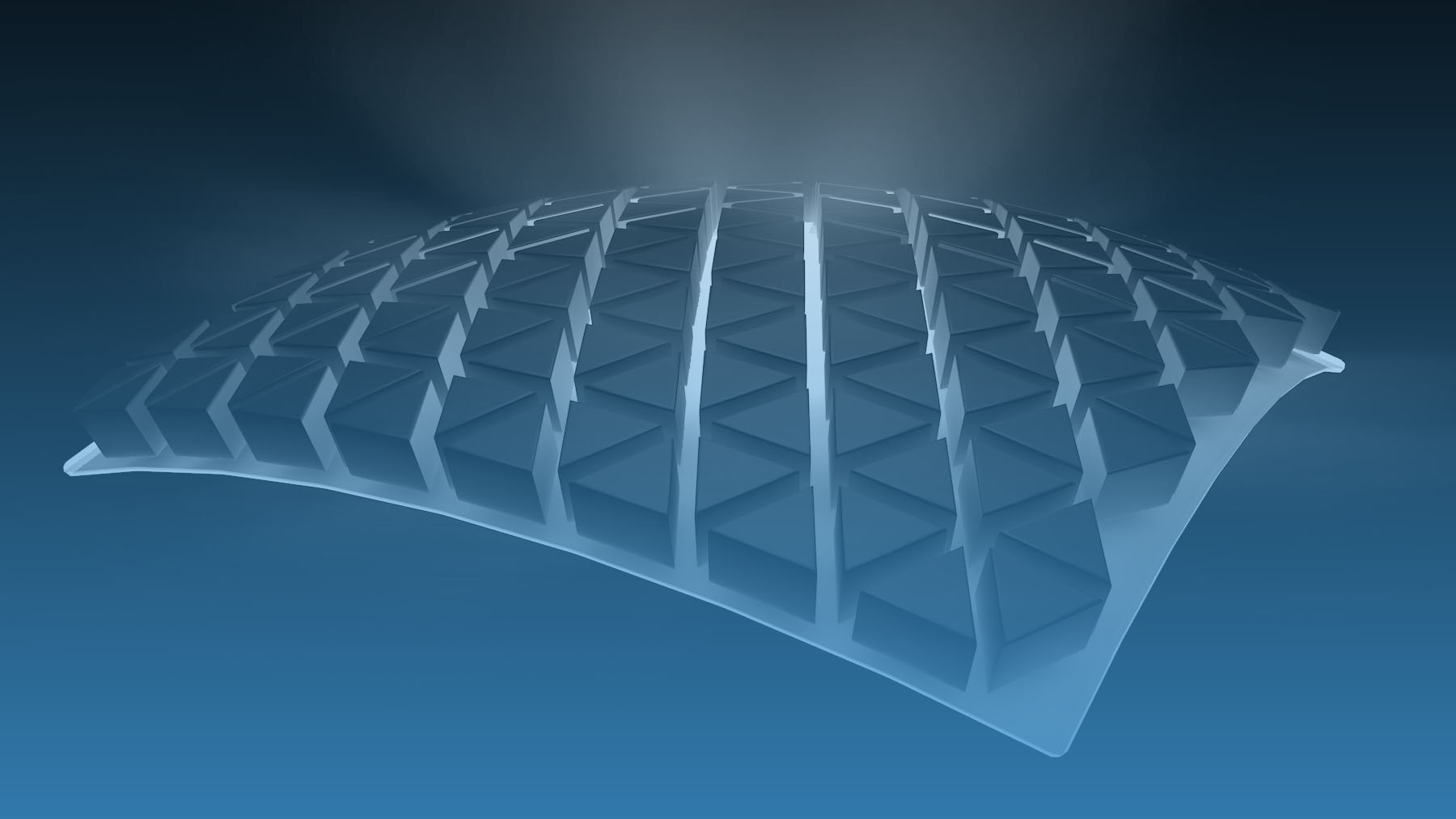

For the test prototype samples created for Exoskin, I began with a variety of panelization methods for the rigid material layer, but quickly narrowed down to a matched triangular mesh pattern for its aesthetic value, its ease of fabrication, and the chance to explore its control of light permeability.

One interesting discovery during this implementation process was anisotropy in the flexure deformation of the material when using this triangular panelization method. Flexure in the Y axis caused the scales to group like diamonds, while flexure in the Z axis caused them to group in rows.

Finally, I produced a last iteration that combined many of the actuation and sensing features I wanted to explore in a single prototype. Through parallel use of the Pneuduino platform and an Arduino, I was able to actively and dynamically drive the actuation of the air bladder as well as an embedded LED. Both then responded to active capacitive input from copper pads below. This combination helped show how the composite’s light permeability changes through the actuation and resulting separation of the panels in the rigid layer.
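The sense-then-actuate loop described here can be illustrated in a few lines. This is a hedged sketch in plain Python rather than the firmware that ran on the Pneuduino and Arduino: the threshold values, the raw reading scale, and the idea of switching the bladder and the LED together with hysteresis are all assumptions made for illustration.

```python
def update_state(active, reading, on_threshold=600, off_threshold=400):
    """Hysteresis on a raw capacitive reading (hypothetical 0-1023 scale).

    The output turns on at or above `on_threshold` and only turns off again
    at or below `off_threshold`, so noise near one threshold cannot make the
    bladder chatter.
    """
    if not active and reading >= on_threshold:
        return True
    if active and reading <= off_threshold:
        return False
    return active

def run(readings):
    """Map a stream of pad readings to bladder and LED commands."""
    active = False
    commands = []
    for reading in readings:
        active = update_state(active, reading)
        # Assumption: the air bladder and the LED are driven together.
        commands.append({"inflate": active, "led_on": active})
    return commands
```

Feeding `run` a short list of readings shows the touch latching on once the pad is pressed and releasing only after the reading falls well below the press level.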

uniMorph is an enabling technology for rapid digital fabrication of customized thin-film shape-changing interfaces, developed primarily by my friend & labmate Felix Heibeck. In this project, I was the Robin to his Batman, providing design, film, and intellectual support.

uniMorph

Fabricating thin-sheet shape-changing interfaces

By combining the thermoelectric characteristics of copper with the high thermal expansion rate of ultra-high molecular weight polyethylene, we are able to actuate the shape of flexible circuit composites directly. The shape-changing actuation is enabled by a temperature-driven mechanism, which reduces the complexity of fabrication for thin shape-changing interfaces.

uniMorph composites can be actuated by either environmental temperature changes or active heating of embedded structures and can actuate in different shape-changing primitives. Different sensing techniques that leverage the existing copper structures can be seamlessly embedded into the uniMorph composite.

Responsive hardware interfaces seek to take the lessons and design patterns we’ve discovered in the interactive digital world and bring them back to the physical one, from hover states to context sensitivity.

While traditionally a context shift in responsive design relies upon a change in the user’s device or screen size, there are much more impactful determinants of context such as the user’s locus of attention, their emotional state, and even their current kinesthetic relationship with the interface.

Here, I've created a prototype that represents but an instance of this. The Clutch Knob senses gaze and adapts both a visual & physical interface to match the acuity of the user depending on their direct or indirect attention.

Clutch Knob

Gaze-aware volume knob for automotive use

You could imagine using this type of interface augmentation in a car dashboard. The interface allows speed, precision, and expertise when the driver is paying full attention to it: looking at the screen gives the driver smaller, finer-grained volume adjustments, and the physical knob makes its tactile detents denser to match. Looking away while using the same interface makes it adapt to the driver's lower level of precision & attention by increasing the font size, removing complex UI elements, and physically increasing the detent spacing.
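As a rough illustration of that behavior, the gaze state can be treated as a switch between two interface modes. The sketch below is an assumption-laden model, not the Clutch Knob's actual code: the mode names, detent counts, step sizes, and font sizes are hypothetical values chosen only to show the mapping.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class KnobMode:
    detents_per_turn: int   # how many tactile clicks in one full rotation
    volume_step: int        # volume change per click, on a 0-100 scale
    font_size_pt: int       # on-screen label size
    show_detail_ui: bool    # whether complex UI elements stay visible

# Hypothetical settings: dense, fine detents when the driver looks at the
# screen; sparse, coarse detents and larger type when they look away.
FOCUSED = KnobMode(detents_per_turn=36, volume_step=1, font_size_pt=14, show_detail_ui=True)
GLANCE_AWAY = KnobMode(detents_per_turn=12, volume_step=5, font_size_pt=32, show_detail_ui=False)

def select_mode(gaze_on_screen):
    """Pick the interface mode from a boolean gaze signal."""
    return FOCUSED if gaze_on_screen else GLANCE_AWAY

def detent_spacing_deg(mode):
    """Angular distance between neighbouring detents."""
    return 360.0 / mode.detents_per_turn

def apply_rotation(volume, clicks, mode):
    """Apply a number of detent clicks and clamp the result to 0-100."""
    return max(0, min(100, volume + clicks * mode.volume_step))
```

With these placeholder numbers, three clicks change the volume by 3 while the driver watches the screen and by 15 when they look away, and the detent spacing widens from 10 to 30 degrees.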

I worked with many members of our research group to develop a hybrid physical display method using optical fibers. By adding them, one can unify the input sensing and new visual output functionalities in one material, creating a dynamic texture display called Pneuxel.

Optielastic

Custom-built Processing visualizer with realtime haptic output

Pneuxel is made of a five-by-five grid of individually controllable cells that can light up through an optical waveguide composite. I created a Processing app that can import specially designed animated GIFs, preview them in 3D in software, and play them back in real time on the Pneuxel display.
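The core of such a playback step is reducing each animation frame to one value per cell. The original tool was written in Processing; the following is a small Python sketch of the idea only, assuming each frame has already been decoded into rows of 0-255 brightness values and that a cell's output can be driven by the average brightness of its block. The function name and the averaging rule are illustrative assumptions.

```python
GRID = 5  # Pneuxel is described as five by five cells

def frame_to_cells(frame, grid=GRID):
    """Average a 2D brightness frame into grid x grid cell values.

    `frame` is a list of equal-length rows of ints in 0-255, at least
    `grid` pixels wide and tall. Returns `grid` rows of `grid` ints.
    """
    height, width = len(frame), len(frame[0])
    cells = []
    for gy in range(grid):
        y0, y1 = gy * height // grid, (gy + 1) * height // grid
        row = []
        for gx in range(grid):
            x0, x1 = gx * width // grid, (gx + 1) * width // grid
            block = [frame[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(block) // len(block))  # integer mean brightness
        cells.append(row)
    return cells
```

Running this over every frame of an animation gives a sequence of 5x5 grids that a display driver could step through at the GIF's frame rate.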